Enhancing Immersive Sensemaking with Gaze-Driven Smart Recommendations

Published in the 30th Annual ACM Conference on Intelligent User Interfaces (IUI), 2025

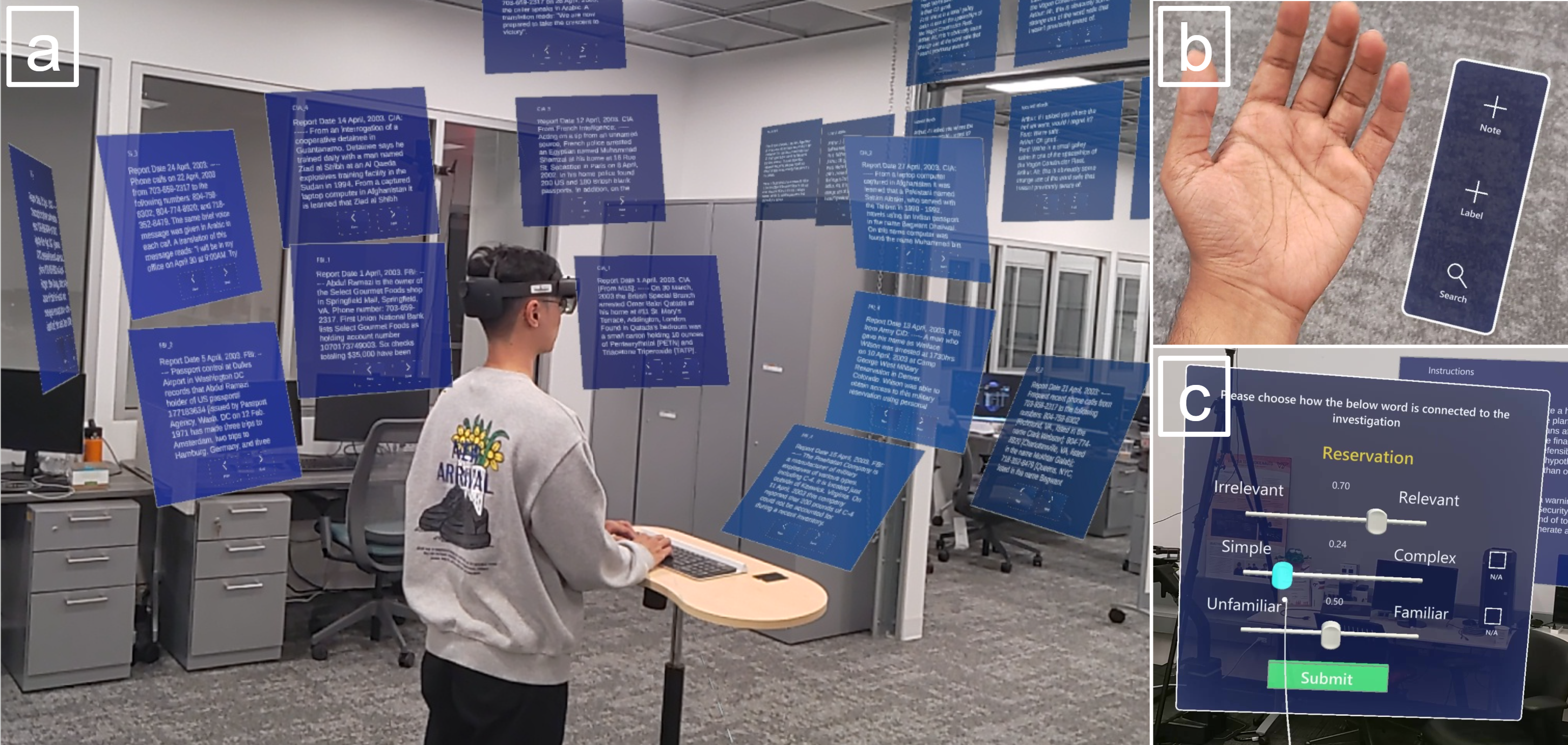

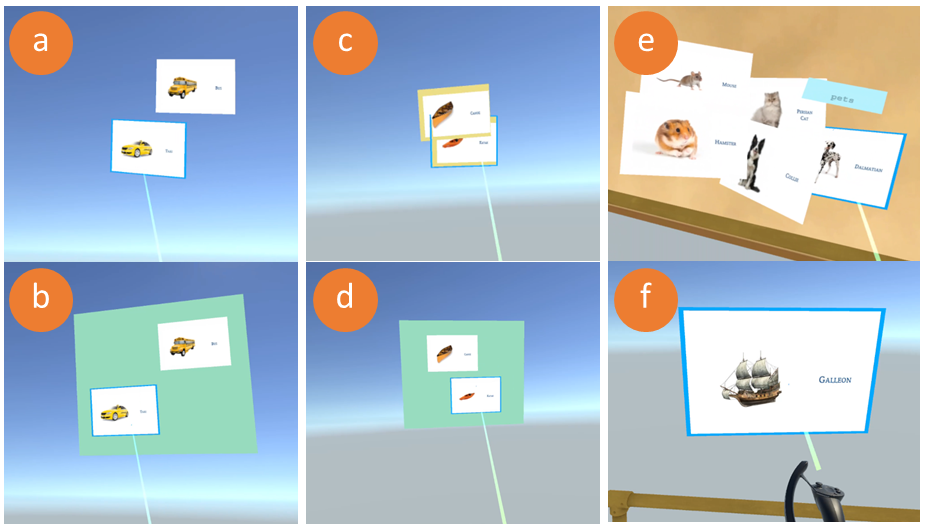

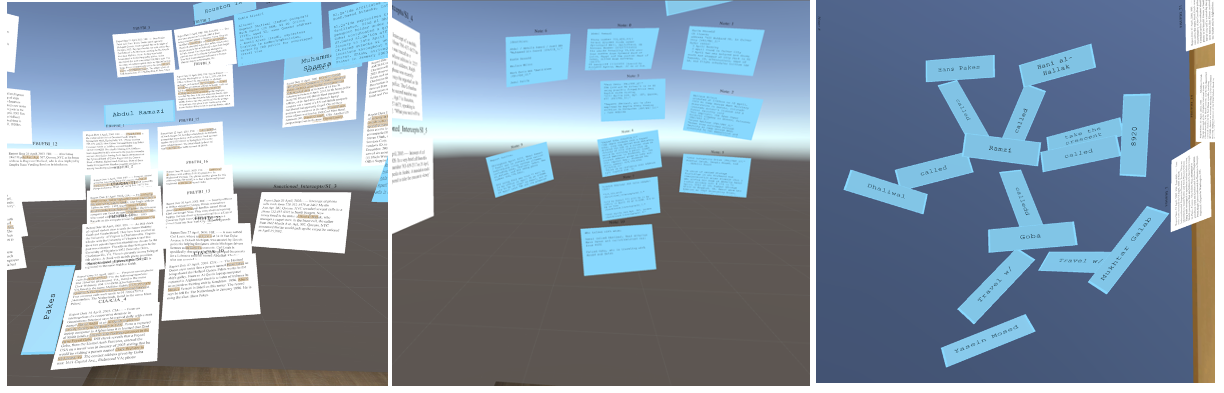

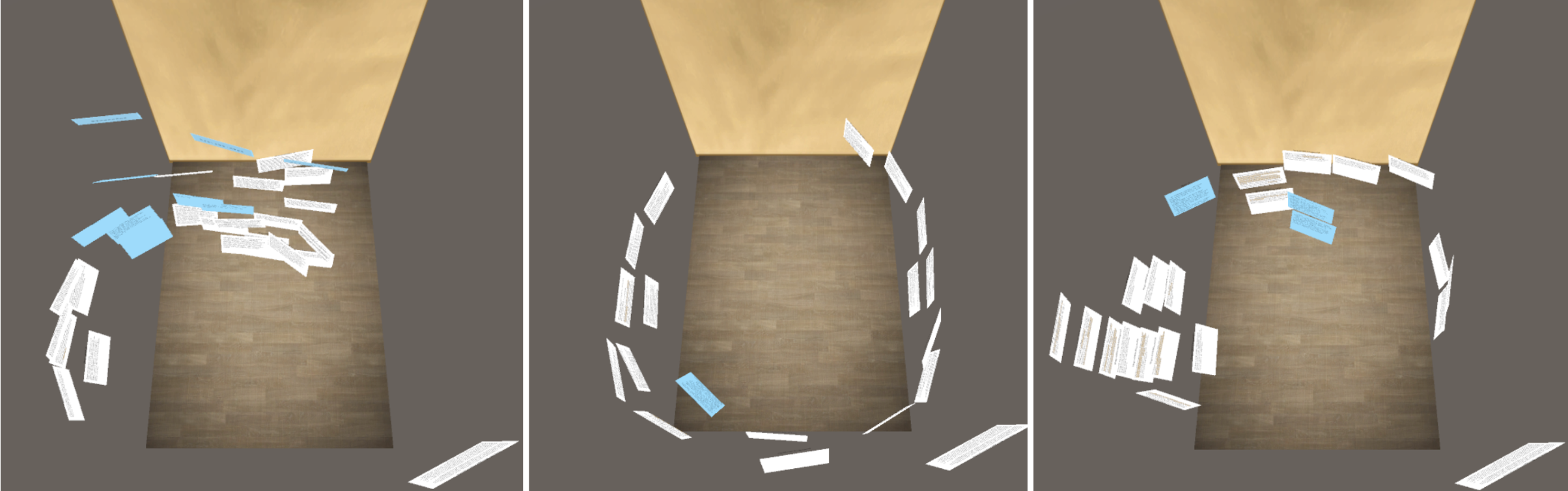

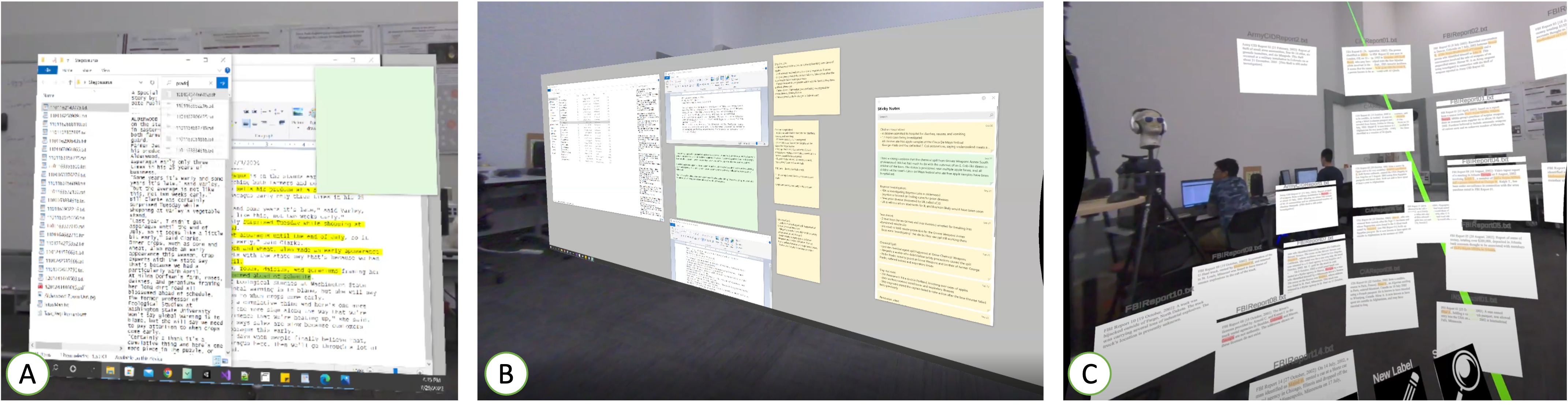

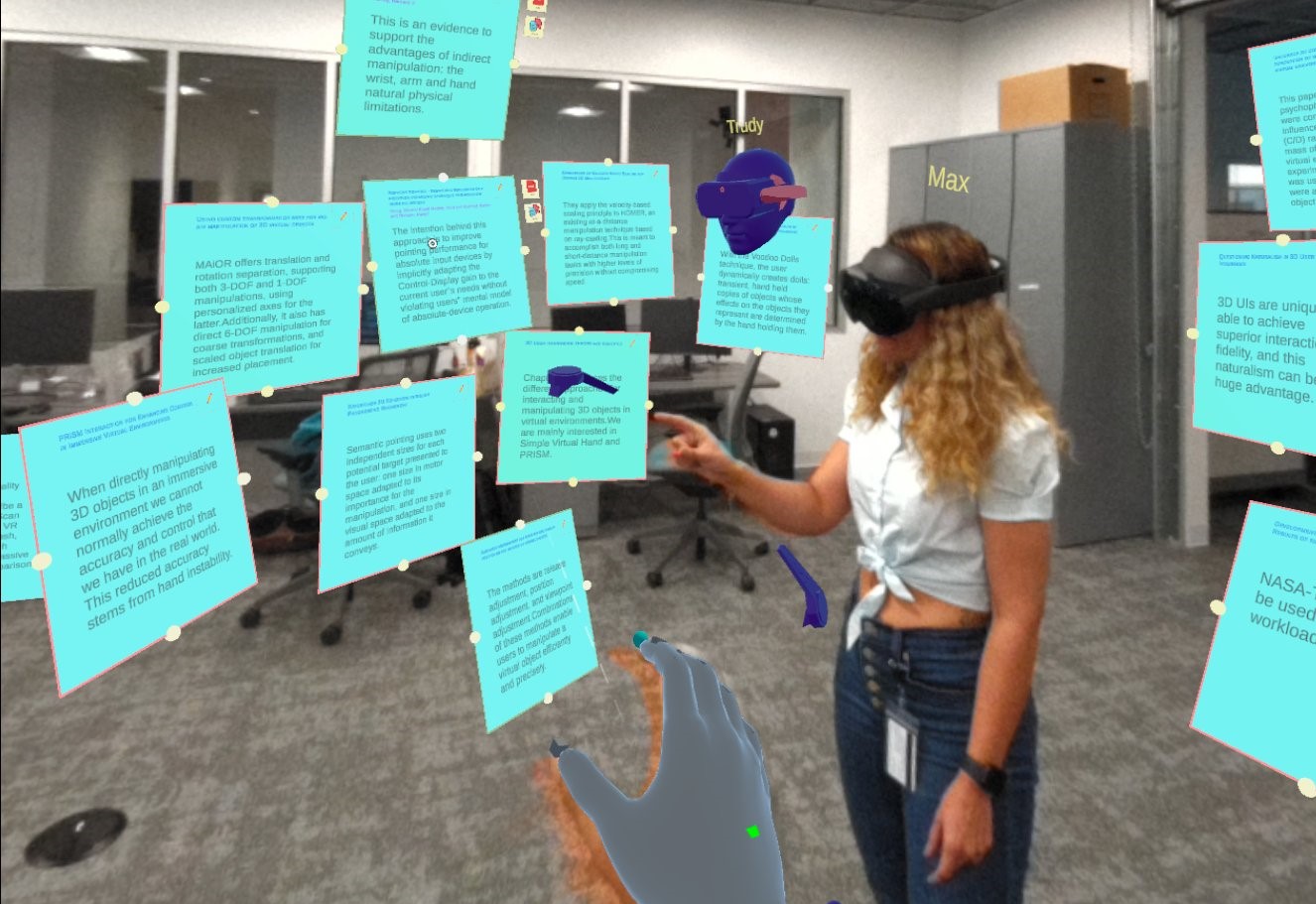

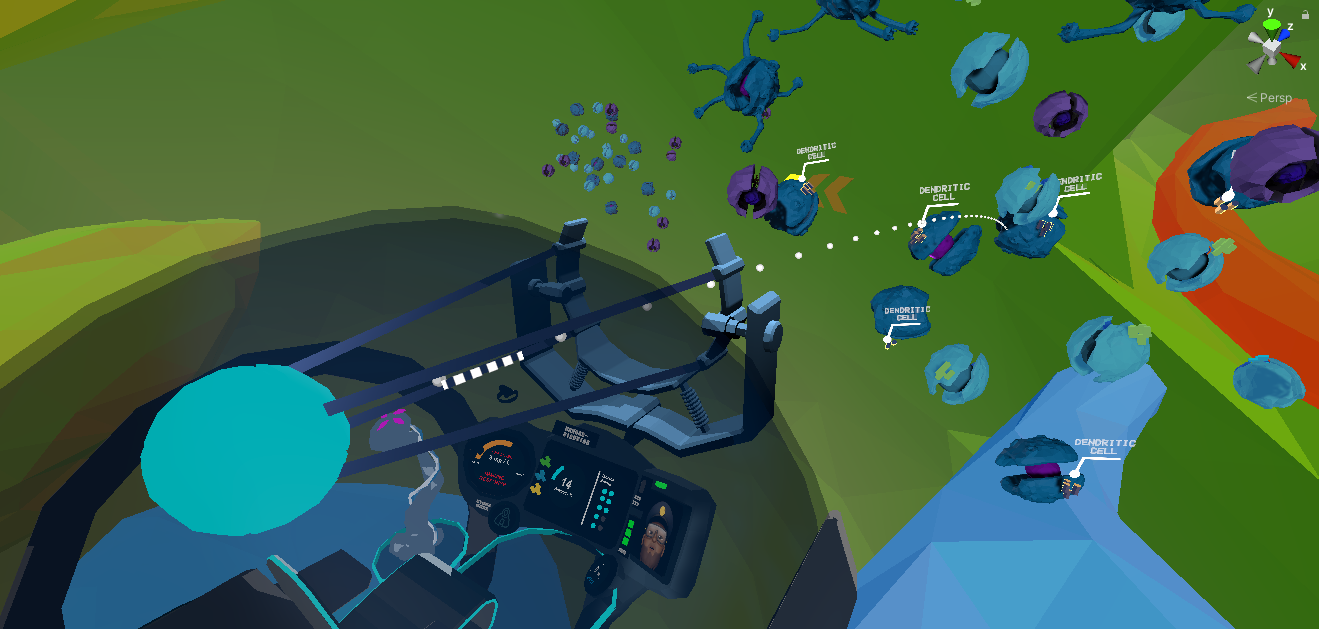

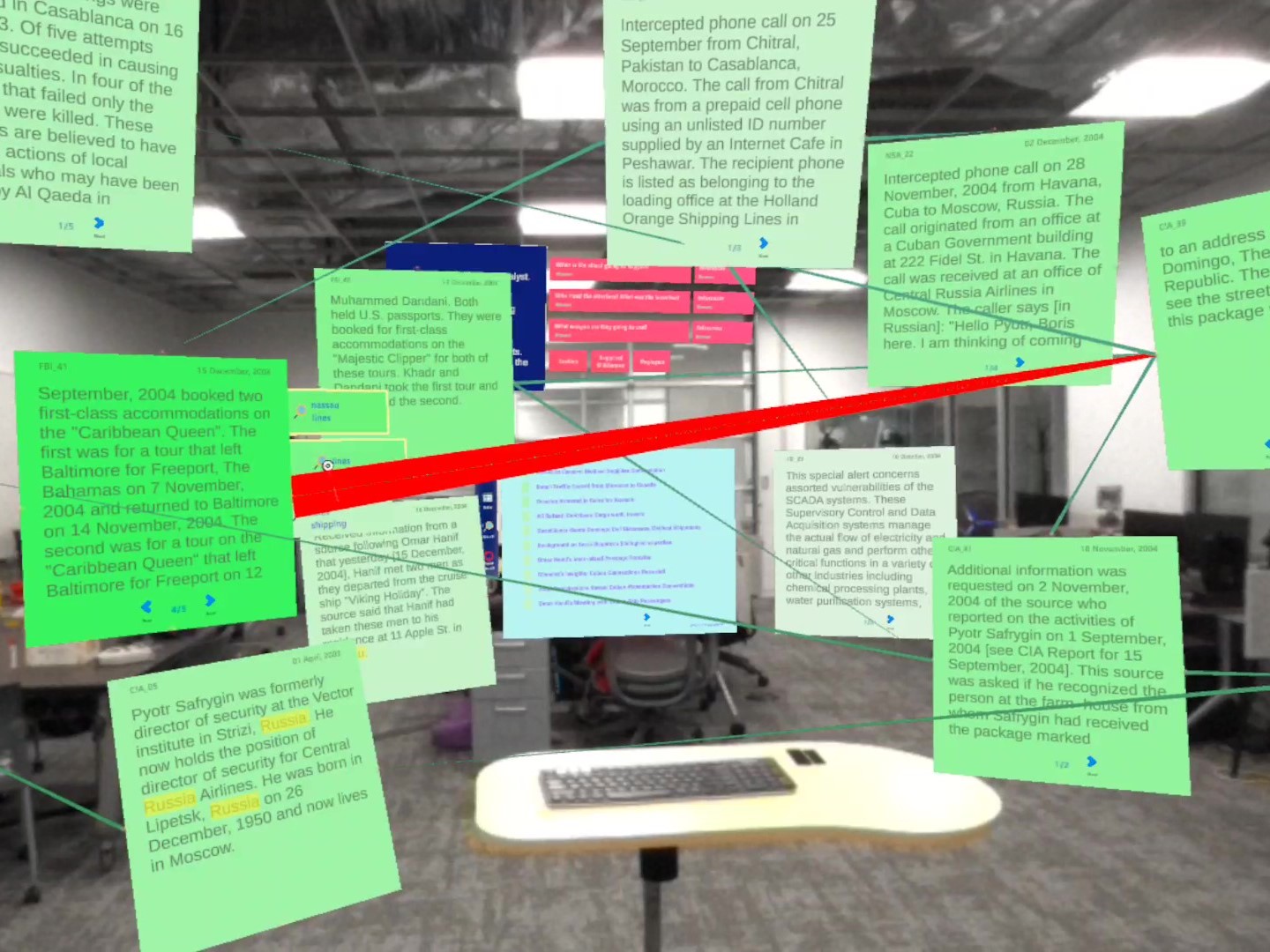

Sensemaking is a complex task that places a heavy cognitive demand on individuals. With the recent surge in data availability, making sense of vast amounts of information has become a significant challenge for many professionals, such as intelligence analysts. Immersive technologies such as mixed reality offer a potential solution by providing virtually unlimited space to organize data. However, the difficulty of processing data, filtering relevant information, and synthesizing insights remains. We propose using eye-tracking data from mixed reality head-worn displays to derive the analyst’s perceived interest in documents and words, and to convey that part of the mental model back to the analyst. The global interest of each document is reflected in its color and its position in the document list, while the local interest within a document is used to generate focused recommendations for that document. To evaluate these recommendation cues, we conducted a user study with two conditions: a gaze-aware system, EyeST, and a Freestyle system without gaze-based visual cues. Our findings reveal that EyeST helped analysts stay on track by reading more essential information while avoiding distractions. However, this came at the cost of reduced focused attention and lower perceived system performance. The results of our study highlight the need for explainable AI in human-AI collaborative sensemaking to build user trust and encourage the integration of AI outputs into the immersive sensemaking process. Based on our findings, we offer a set of guidelines for designing gaze-driven recommendation cues in immersive environments.
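The abstract describes the pipeline only at a high level: gaze yields per-document (global) and per-word (local) interest, global interest drives document color and list order, and local interest drives recommendations. The Python sketch below illustrates that flow under stated assumptions; it is not the EyeST implementation. It assumes interest is approximated by cumulative fixation dwell time, and the names `GazeSample`, `global_interest`, `ranked_documents`, `interest_color`, `local_interest`, and `recommend` are hypothetical. The grey-to-orange color ramp and the keyword-overlap recommender are illustrative stand-ins, not techniques the paper is known to use.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GazeSample:
    """One fixation on a word in a document (hypothetical record format)."""
    doc_id: str
    word: str
    dwell_ms: float


def global_interest(samples):
    """Assumed global interest: total dwell per document, scaled so the max is 1.0."""
    totals = defaultdict(float)
    for s in samples:
        totals[s.doc_id] += s.dwell_ms
    peak = max(totals.values(), default=0.0)
    if peak <= 0:
        return {}
    return {doc: total / peak for doc, total in totals.items()}


def ranked_documents(interest):
    """Order documents for the list view, highest interest first (ties broken by id)."""
    return sorted(interest, key=lambda doc: (-interest[doc], doc))


def interest_color(score, low=(200, 200, 200), high=(255, 140, 0)):
    """Blend linearly from a neutral grey (score 0) to a highlight color (score 1)."""
    return tuple(round(a + (b - a) * score) for a, b in zip(low, high))


def local_interest(samples, doc_id, k=3):
    """Assumed local interest: the k words with the most dwell time inside one document."""
    words = defaultdict(float)
    for s in samples:
        if s.doc_id == doc_id:
            words[s.word.lower()] += s.dwell_ms
    ranked = sorted(words.items(), key=lambda kv: (-kv[1], kv[0]))
    return [word for word, _ in ranked[:k]]


def recommend(samples, doc_id, corpus, k=3):
    """Keyword-overlap stand-in: rank other documents by how many focus words they share."""
    focus = set(local_interest(samples, doc_id, k))
    overlap = {
        other: len(focus & set(text.lower().split()))
        for other, text in corpus.items()
        if other != doc_id
    }
    ranked = sorted(overlap.items(), key=lambda kv: (-kv[1], kv[0]))
    return [doc for doc, count in ranked if count > 0]


# Toy data: the analyst lingers on "shipment" and "harbor" in d1.
samples = [
    GazeSample("d1", "shipment", 900),
    GazeSample("d1", "harbor", 600),
    GazeSample("d1", "the", 50),
    GazeSample("d2", "meeting", 300),
]
corpus = {
    "d1": "shipment arrives at the harbor",
    "d2": "meeting scheduled downtown",
    "d3": "harbor shipment delayed",
    "d4": "weather report",
}
interest = global_interest(samples)
print(ranked_documents(interest))             # ['d1', 'd2']
print(interest_color(interest["d1"]))         # (255, 140, 0)
print(recommend(samples, "d1", corpus, k=2))  # ['d3']
```

Dwell time is only one possible proxy for interest. A practical system would likely also need to filter very short fixations and stop words; in this toy example, "the" enters the focus set once `k=3`.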

Recommended citation: Tahmid, I. A., North, C., Davidson, K., Whitley, K., & Bowman, D. A. (2025, March). Enhancing Immersive Sensemaking with Gaze-Driven Smart Recommendations. In 2025 ACM Intelligent User Interfaces (IUI). ACM.
