Sitemap

A list of all the posts and pages found on the site. For you robots out there, there is an XML version available for digesting as well.

Pages

Posts

Say Hello to Dr. Tahmid!

Published:

Case Study: Gaze-Aware AI for Immersive Analytics

Published:

Audience: UX Designer & UX Researcher recruiters.

My role: End-to-end research + prototyping in MR; eye-tracking–driven AI cues; multi-study validation.

Internship at Qualcomm

Published:

I spent the summer interning at Qualcomm, working on the XR Systems team. My contributions are as follows:

Attending NSF SHREC Annual Workshop

Published:

I’ll be attending the Annual Workshop organized by the National Science Foundation (NSF) SHREC: Center for Space, High-Performance, and Resilient Computing in San Antonio, Texas, on January 14-15, 2025.

EyeST Recommendation Paper accepted at IUI 2025

Published:

Our paper “Enhancing Immersive Sensemaking with Gaze-Driven Recommendation Cues” has been conditionally accepted at the ACM Conference on Intelligent User Interfaces (IUI 2025), to be held in Cagliari, Italy, on March 24-27, 2025. Yay! Here’s a brief intro to what we did in this paper:

Aspire! Award for Self-Understanding and Integrity

Published:

I am humbled to share that I received the Aspire! Award for my pursuit of building common ground through creative, thoughtful, and impactful projects in service to the community at Virginia Tech. At a breakfast banquet honoring all Aspire! winners, Chris Wise, Assistant Vice President for Student Affairs, introduced me to the audience by sharing my work on celebrating cultural richness through International Mother Language Day, developing new ways to make international students’ early days easier, and supporting the community of Bangladeshi students at Virginia Tech with my skills, experiences, and leadership. The Aspire! Awards honor students, faculty, and staff who embody Student Affairs’ Aspirations for Student Learning.

Attended Tapia Conference in San Diego, CA

Published:

The Tapia Conference, officially known as the ACM Richard Tapia Celebration of Diversity in Computing, is an annual event that promotes diversity and inclusion in computing and technology fields.

Awarded Pratt Fellowship for 2024-25

Published:

I was awarded the prestigious Pratt Fellowship for the 2024-25 academic year. Learn about different graduate fellowships here.

I’m a Ph.D. Candidate Now!

Published:

I passed my preliminary exam today! Thanks to my professor and the committee for all the support and guidance. My dissertation topic is: Rich Semantic Interaction in Immersive Sensemaking. If you want to know more about the proposed projects, please feel free to reach out!

Our ISMAR Travel Got Featured in VT News

Published:

The 3D Interaction Group went to Sydney, Australia, with a whopping seven papers and one competition entry. Check out our news here!

Four Projects Got Featured in CHCI

Published:

Four of my projects were featured by Virginia Tech CHCI. Check them out here!

news

portfolio

XR Prototypes

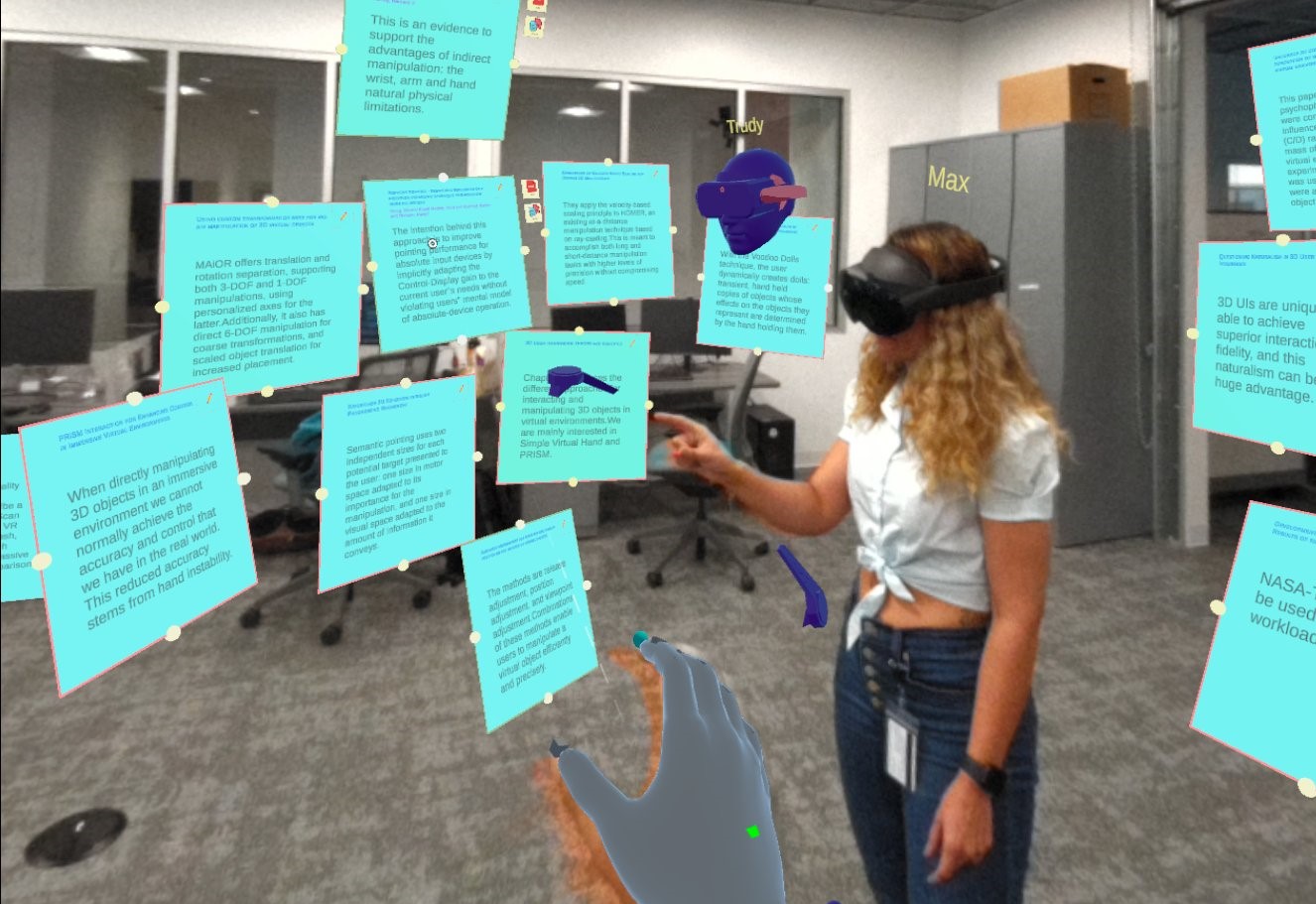

As an XR researcher, I’m constantly exploring the possibilities of immersive technologies through the development of XR applications. My primary focus lies in crafting novel 3D interaction techniques aimed at elevating the user experience within these immersive environments. What you’ll find inside is a selection of prototypes showcasing some of these explorations. These projects reflect my skills as a Unity developer, my innovative solutions for interaction design, and my ability to visually communicate complex concepts. The prototypes will provide a glimpse into the ongoing evolution of my work in shaping the future of XR interaction.

Presenting Ideas and Design Concepts

Good design is about more than just aesthetics; it’s about clarity, impact, and memorable storytelling. I apply these principles to every presentation I create, using minimalist designs to showcase ideas in a visually engaging and easy-to-digest format. Explore the different ways I presented my thought process to my peers.

Graphic Designs

Throughout my experiences with various organizations, I’ve had the opportunity to explore my passion for graphic design, primarily creating posters and banners for events and initiatives. I like playing with colors and perspectives to bring a minimalist and aesthetically pleasing approach to my work. This collection showcases a few examples of these designs, reflecting my personal style and the specific needs of each organization.

Photography

Photography is more than just a hobby for me. It’s a way of seeing and understanding the world. It’s a language spoken through light, shadow, and perspective, allowing me to translate emotions and tell stories that words sometimes fail to convey. Here is a small collection of images from my journey of self-understanding, each representing a moment deeply etched into my memory. Please visit my Instagram page for the most up-to-date collection.

prototypes

Collaborative Immersive Space to Think

Two or more people can join the immersive room to collaborate and ideate on different topics.

Collaboratively Inspecting Additive Manufacturing Defects in Immersive Space

Two or more inspectors can observe and identify defects in additive manufacturing models through synchronous and asynchronous collaboration.

publications

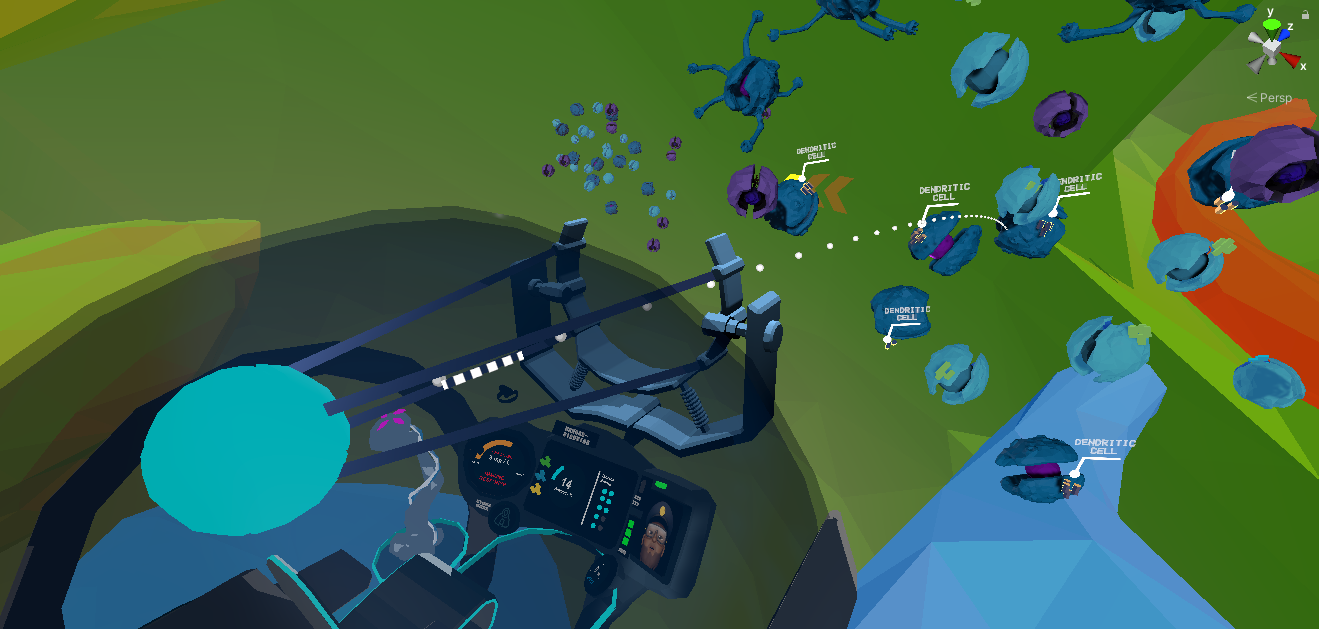

Fantastic voyage 2021: Using interactive VR storytelling to explain targeted COVID-19 vaccine delivery to antigen-presenting cells

Published in 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2021

Winner of the 3DUI Contest 2021

Science storytelling is an effective way to turn abstract scientific concepts into easy-to-understand narratives. Science storytelling in immersive virtual reality (VR) can further optimize learning by leveraging rich interactivity in a virtual environment and creating an engaging learning-by-doing experience. In the current context of the COVID-19 pandemic, we propose a solution to use interactive storytelling in immersive VR to promote science education for the general public on the topic of COVID-19 vaccination. The educational VR storytelling experience we have developed uses sci-fi storytelling, adventure and VR gameplay to illustrate how COVID-19 vaccines work. After playing the experience, users will understand how the immune system in the human body reacts to a COVID-19 vaccine so that it is prepared for a future infection from the real virus.

Recommended citation: Zhang, L., Lu, F., Tahmid, I.A., Davari, S., Lisle, L., Gutkowski, N., Schlueter, L. and Bowman, D.A., 2021, March. Fantastic voyage 2021: Using interactive VR storytelling to explain targeted COVID-19 vaccine delivery to antigen-presenting cells. In 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 695-696). IEEE.

Read Full Paper | See Prototype

Clean the Ocean: An Immersive VR Experience Proposing New Modifications to Go-Go and WiM Techniques

Published in 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2022

Winner of the 3DUI Contest 2022

In this paper, we present our solution to the 2022 3DUI Contest challenge. We aim to provide an immersive VR experience that increases players’ awareness of trash pollution in the ocean while improving current interaction techniques in virtual environments. To achieve these objectives, we adapted two classic interaction techniques, Go-Go and World in Miniature (WiM), to provide an engaging minigame in which the user collects trash in the ocean. To improve precision and address occlusion issues in the traditional Go-Go technique, we propose ReX Go-Go. We also propose an adaptation of WiM, referred to as Rabbit-Out-of-the-Hat, that allows exocentric interaction for easier object retrieval.

Recommended citation: Lisle, L., Lu, F., Davari, S., Tahmid, I.A., Giovannelli, A., Ilo, C., Pavanatto, L., Zhang, L., Schlueter, L. and Bowman, D.A., 2022, March. Clean the ocean: An immersive vr experience proposing new modifications to go-go and wim techniques. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 920-921). IEEE.

Read Full Paper | See Prototype
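For readers unfamiliar with the baseline that ReX Go-Go modifies: the classic Go-Go technique (Poupyrev et al., 1996) maps the real hand's distance from the body, R_r, to a virtual hand distance R_v, one-to-one when R_r < D and R_v = R_r + k(R_r - D)^2 beyond the threshold D. The minimal Python sketch below illustrates only that classic mapping, not the ReX modifications described in the paper; the function names and the values of D and k are illustrative assumptions.

```python
import math


def gogo_virtual_distance(real_dist: float, threshold: float, k: float) -> float:
    """Classic Go-Go mapping from real hand distance to virtual hand distance.

    Within `threshold` (D) the mapping is one-to-one; beyond it the virtual
    hand grows quadratically: R_v = R_r + k * (R_r - D) ** 2.
    """
    if real_dist < threshold:
        return real_dist
    return real_dist + k * (real_dist - threshold) ** 2


def gogo_virtual_hand(chest, hand, threshold: float, k: float):
    """Place the virtual hand along the chest-to-hand direction (3D tuples)."""
    real_dist = math.dist(chest, hand)
    if real_dist == 0.0:
        return tuple(chest)  # hand at the body origin: no direction to extend along
    scale = gogo_virtual_distance(real_dist, threshold, k) / real_dist
    return tuple(c + (h - c) * scale for c, h in zip(chest, hand))


# Illustrative values only: D = 0.4 m and k = 10 per metre are assumptions.
print(gogo_virtual_distance(0.3, threshold=0.4, k=10.0))  # 0.3 (inside D, unchanged)
# Beyond D the hand extends: 0.6 + 10 * 0.2**2, i.e. about 1.0 m along z.
print(gogo_virtual_hand((0.0, 0.0, 0.0), (0.0, 0.0, 0.6), threshold=0.4, k=10.0))
```

The quadratic term keeps fine control near the body while letting small arm extensions reach far objects, which is also why precision drops at a distance, the issue ReX Go-Go sets out to address.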

Evaluation of Pointing Ray Techniques for Distant Object Referencing in Model-Free Outdoor Collaborative Augmented Reality

Published in IEEE Transactions on Visualization and Computer Graphics, 2022

Referencing objects of interest is a common requirement in many collaborative tasks. Nonetheless, accurate object referencing at a distance can be challenging due to the reduced visibility of the objects or the collaborator and limited communication medium. Augmented Reality (AR) may help address the issues by providing virtual pointing rays to the target of common interest. However, such pointing ray techniques can face critical limitations in large outdoor spaces, especially when the environment model is unavailable. In this work, we evaluated two pointing ray techniques for distant object referencing in model-free AR from the literature: the Double Ray technique enhancing visual matching between rays and targets, and the Parallel Bars technique providing artificial orientation cues. Our experiment in outdoor AR involving participants as pointers and observers partially replicated results from a previous study that only evaluated observers in simulated AR. We found that while the effectiveness of the Double Ray technique is reduced with the additional workload for the pointer and human pointing errors, it is still beneficial for distant object referencing.

Recommended citation: Li, Y., Tahmid, I. A., Lu, F., & Bowman, D. A. (2022). Evaluation of pointing ray techniques for distant object referencing in model-free outdoor collaborative augmented reality. IEEE Transactions on Visualization and Computer Graphics, 28(11), 3896-3906.

Read Full Paper

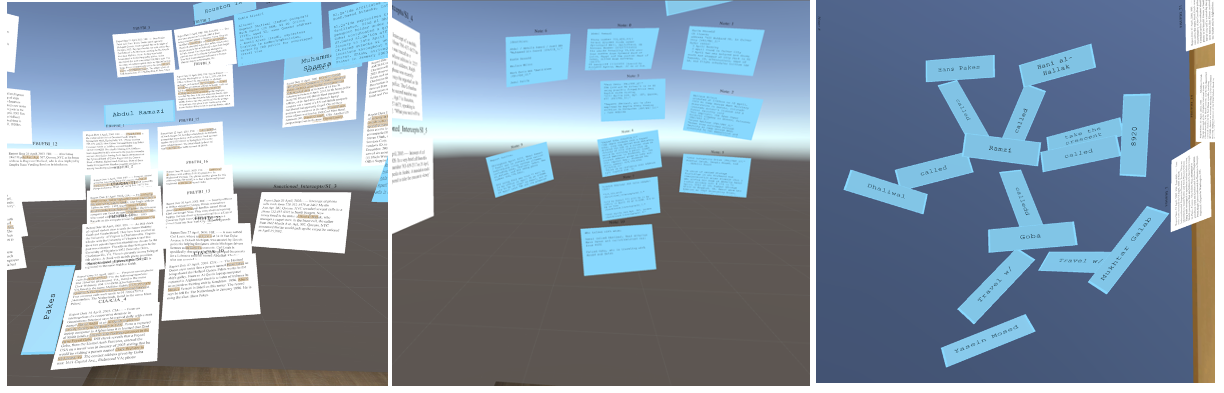

Evaluating the benefits of explicit and semi-automated clusters for immersive sensemaking

Published in 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2022

Immersive spaces have great potential to support analysts in complex sensemaking tasks, but the use of only manual interactions for organizing data elements can become tedious. We analyzed the user interactions to support cluster formation in an immersive sensemaking system, and we designed a semi-automated cluster creation technique that determines the user’s intent to create a cluster based on object proximity. We present the results of a user study comparing this proximity-based technique with a manual clustering technique and a baseline immersive workspace with no explicit clustering support. We found that semi-automated clustering was faster and preferred, while manual clustering gave greater control to users. These results provide support for the approach of adding intelligent semantic interactions to aid the users of immersive analytics systems.

Recommended citation: Tahmid, I. A., Lisle, L., Davidson, K., North, C., & Bowman, D. A. (2022, October). Evaluating the benefits of explicit and semi-automated clusters for immersive sensemaking. In 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 479-488). IEEE.

Read Full Paper | See Prototype
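The abstract does not spell out the exact rule for inferring clustering intent from object proximity, so the following is only a hypothetical sketch of one way proximity could drive semi-automated clustering: documents whose 3D positions lie within an assumed threshold distance are linked, and each connected group (single-linkage, tracked with a union-find structure) becomes a cluster. The function name, the 0.25 m threshold, and the position format are illustrative assumptions, not the system's actual implementation.

```python
import math
from itertools import combinations


def proximity_clusters(positions: dict[str, tuple[float, float, float]],
                       threshold: float) -> list[set[str]]:
    """Group documents whose positions are chained together within `threshold`."""
    parent = {name: name for name in positions}

    def find(x: str) -> str:
        # Follow parent links to the group's root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Link every pair of documents that are close enough to each other.
    for a, b in combinations(positions, 2):
        if math.dist(positions[a], positions[b]) <= threshold:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = {}
    for name in positions:
        groups.setdefault(find(name), set()).add(name)
    # A lone document is not treated as a cluster.
    return [g for g in groups.values() if len(g) > 1]


# Hypothetical layout (metres): three documents placed near each other, one apart.
layout = {
    "doc1": (0.0, 0.0, 0.0),
    "doc2": (0.2, 0.0, 0.0),
    "doc3": (0.35, 0.0, 0.0),
    "doc4": (2.0, 0.0, 0.0),
}
print([sorted(c) for c in proximity_clusters(layout, threshold=0.25)])
# → [['doc1', 'doc2', 'doc3']]
```

Because linking is transitive, doc1 and doc3 end up in the same cluster even though they are farther apart than the threshold; a real system might instead require every member to be near a cluster centroid.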

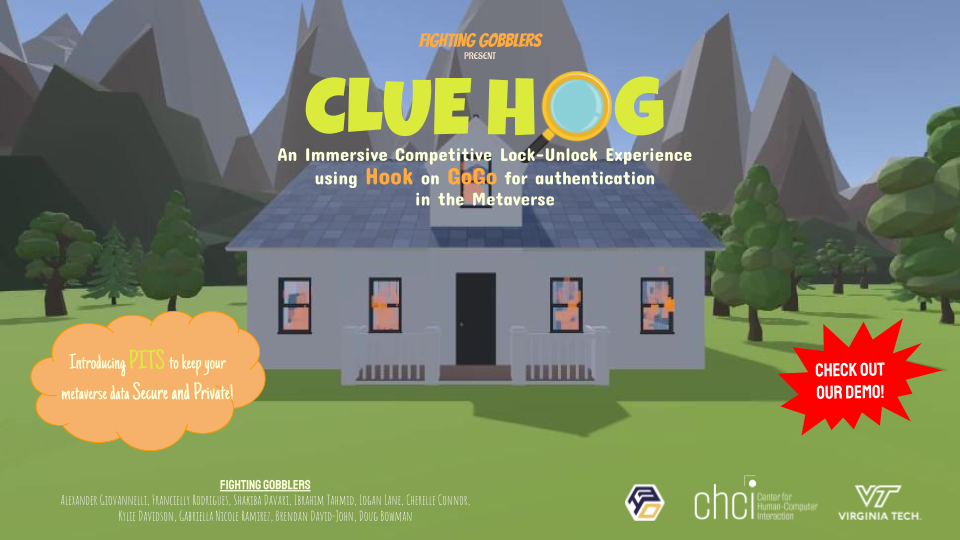

CLUE HOG: An Immersive Competitive Lock-Unlock Experience using Hook On Go-Go Technique for Authentication in the Metaverse

Published in 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2023

This paper presents our solution to the 2023 3DUI Contest challenge. Our goal was to provide an immersive VR experience to engage users in privately securing and accessing information in the Metaverse while improving authentication-related interactions inside our virtual environment. To achieve this goal, we developed an authentication method that uses a virtual environment’s individual assets as security tokens. To improve the token selection process, we introduce the HOG interaction technique. HOG combines two classic interaction techniques, Hook and Go-Go, and improves approximate object targeting and further obfuscation of user password token selections. We created an engaging mystery-solving mini-game to demonstrate our authentication method and interaction technique.

Recommended citation: Giovannelli, A., Rodrigues, F., Davari, S., Tahmid, I.A., Lane, L., Connor, C., Davidson, K., Ramirez, G.N., David-John, B. and Bowman, D.A., 2023, March. CLUE HOG: An Immersive Competitive Lock-Unlock Experience using Hook On Go-Go Technique for Authentication in the Metaverse. In 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 945-946). IEEE.

Read Full Paper | See Prototype

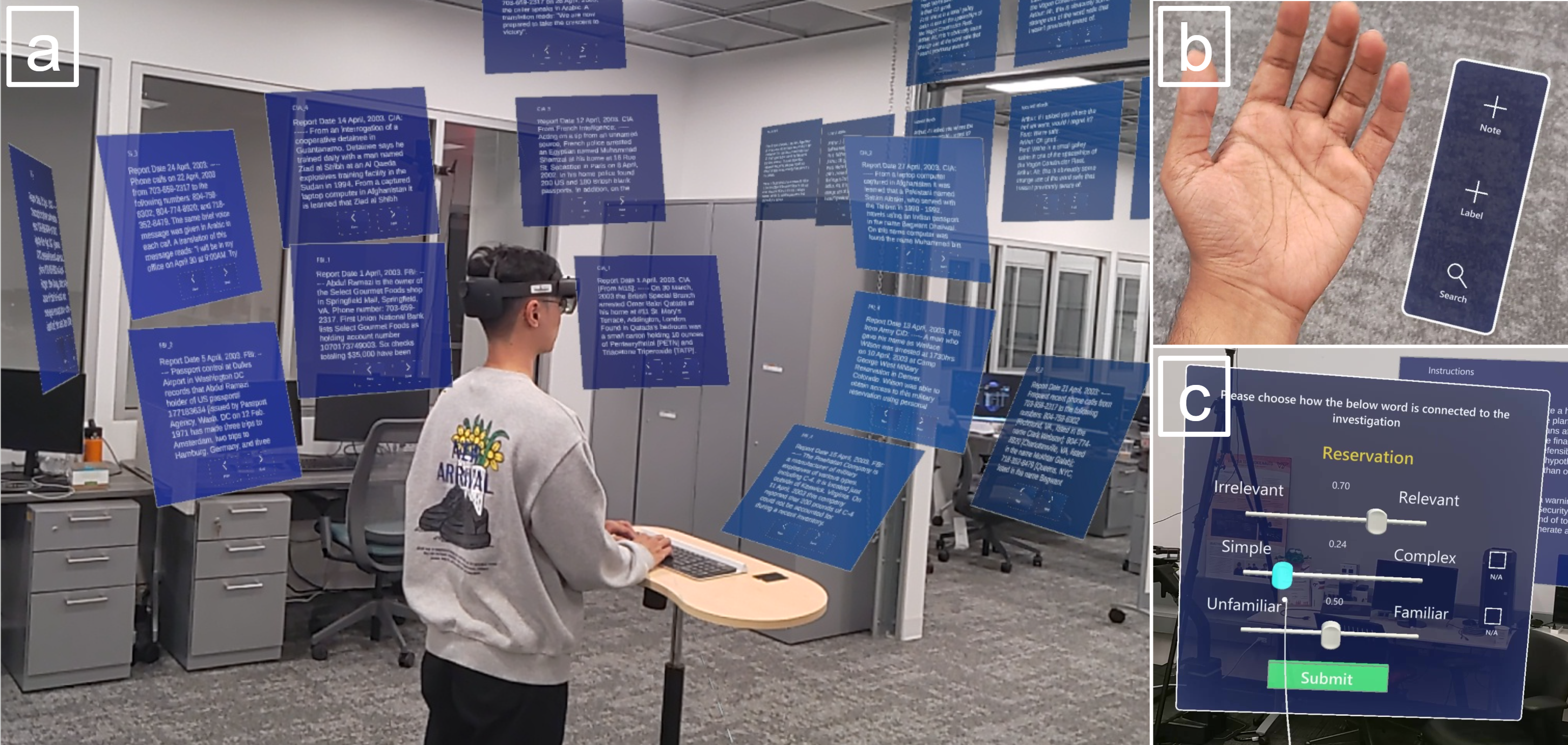

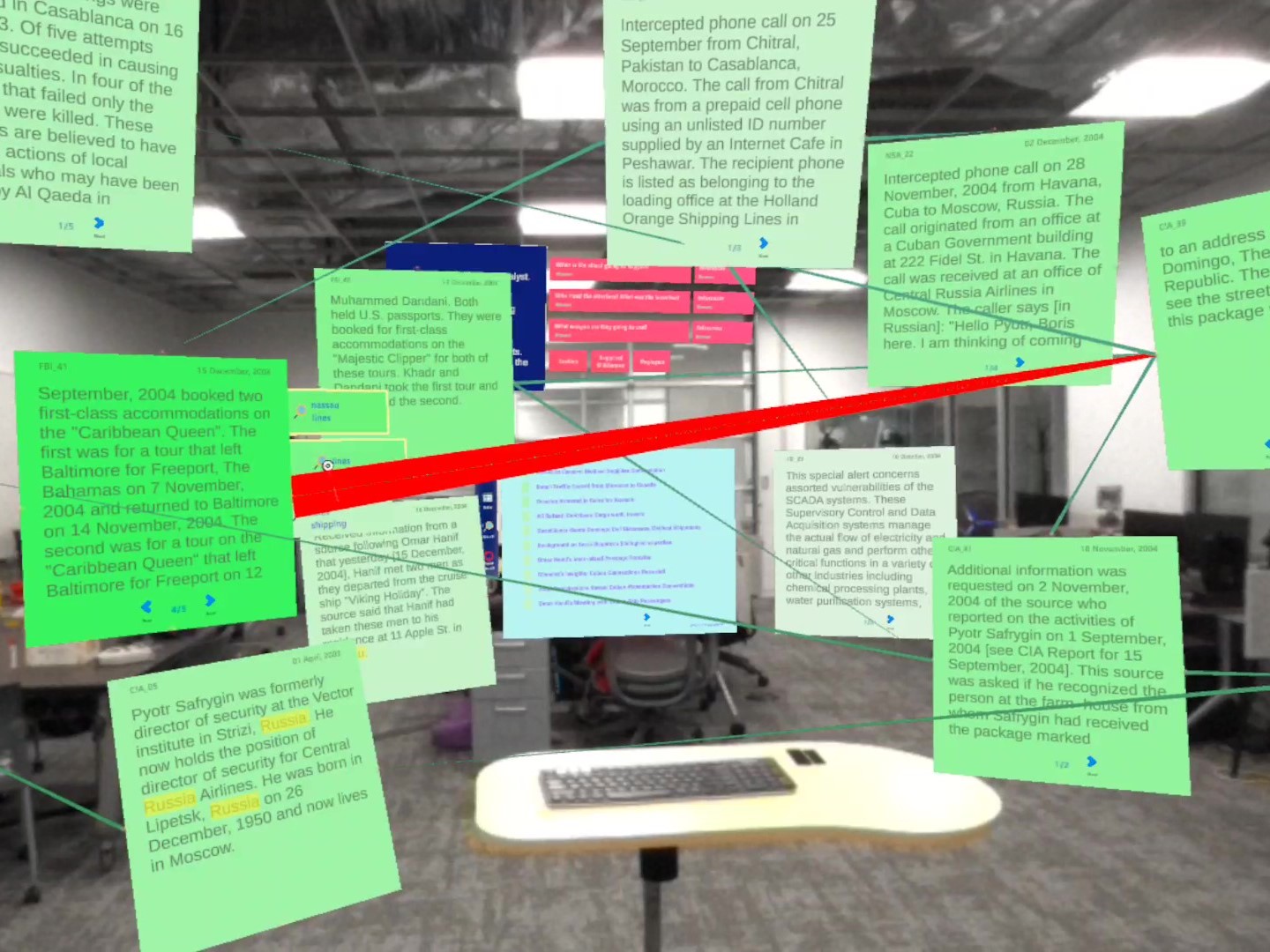

Evaluating the Feasibility of Predicting Information Relevance During Sensemaking with Eye Gaze Data

Published in 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2023

Eye gaze patterns vary based on reading purpose and complexity, and can provide insights into a reader’s perception of the content. We hypothesize that during a complex sensemaking task with many text-based documents, we will be able to use eye-tracking data to predict the importance of documents and words, which could be the basis for intelligent suggestions made by the system to an analyst. We introduce a novel eye-gaze metric called ‘GazeScore’ that predicts an analyst’s perception of the relevance of each document and word when they perform a sensemaking task. We conducted a user study to assess the effectiveness of this metric and found strong evidence that documents and words with high GazeScores are perceived as more relevant, while those with low GazeScores were considered less relevant. We explore potential real-time applications of this metric to facilitate immersive sensemaking tasks by offering relevant suggestions.

Recommended citation: Tahmid, I. A., Lisle, L., Davidson, K., Whitley, K., North, C., & Bowman, D. A. (2023, October). Evaluating the Feasibility of Predicting Information Relevance During Sensemaking with Eye Gaze Data. In 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 713-722). IEEE.

Read Full Paper | See Prototype

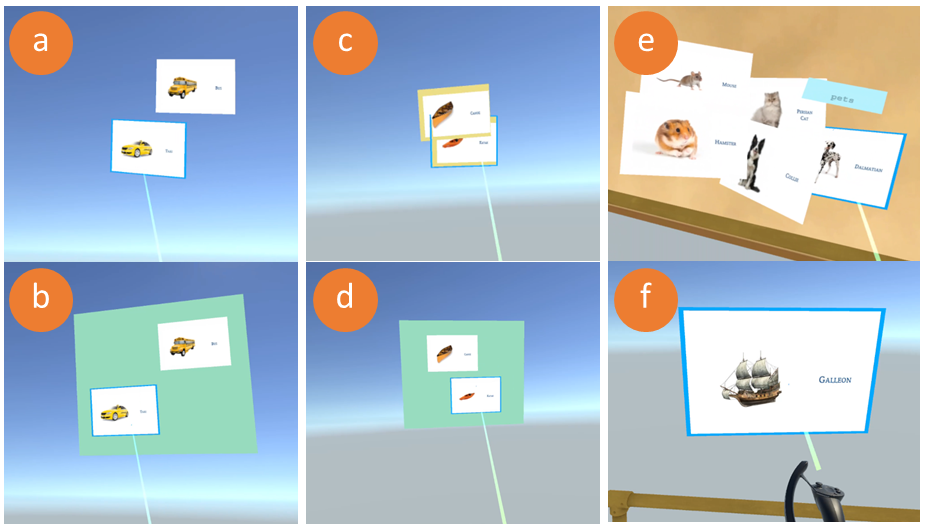

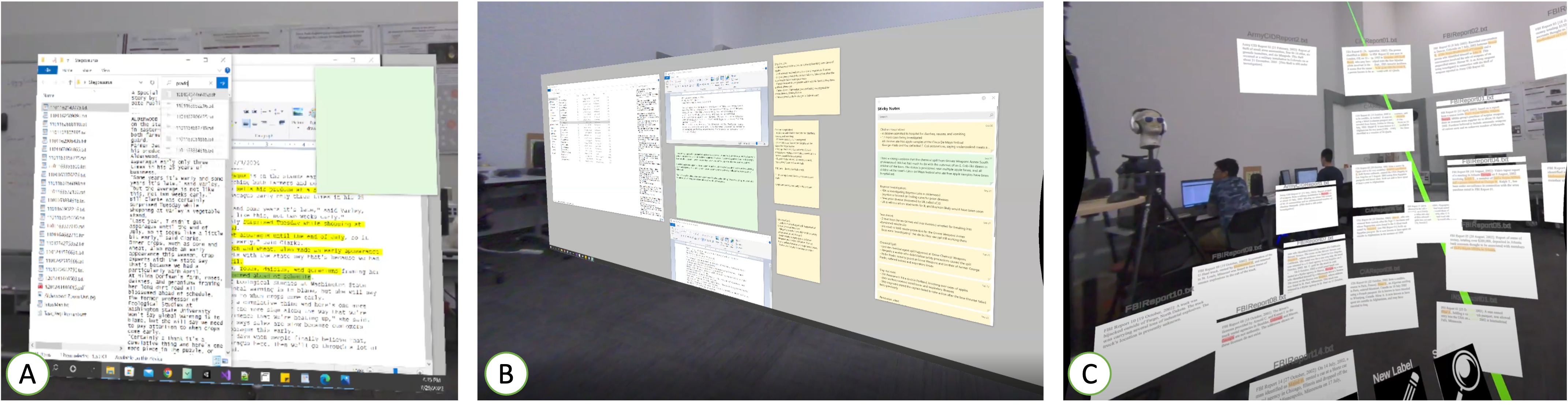

CoLT: Enhancing Collaborative Literature Review Tasks with Synchronous and Asynchronous Awareness Across the Reality-Virtuality Continuum

Published in 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), 2023

Collaboration plays a vital role in both academia and industry whenever we need to browse through a large amount of data to extract meaningful insights. These collaborations often involve people living far from each other, with different levels of access to technology. Effective cross-border collaborations require reliable telepresence systems that provide support for communication, cooperation, and understanding of contextual cues. In the context of collaborative academic writing, while immersive technologies offer novel ways to enhance collaboration and enable efficient information exchange in a shared workspace, traditional devices such as laptops still offer better readability for longer articles. We propose the design of a hybrid cross-reality cross-device networked system that allows the users to harness the advantages of both worlds. Our system allows users to import documents from their personal computers (PC) to an immersive headset, facilitating document sharing and simultaneous collaboration with both colocated colleagues and remote colleagues. Our system also enables a user to seamlessly transition between Virtual Reality, Augmented Reality, and the traditional PC environment, all within a shared workspace. We present the real-world scenario of a global academic team conducting a comprehensive literature review, demonstrating its potential for enhancing cross-reality hybrid collaboration and productivity.

Recommended citation: Tahmid, I. A., Rodrigues, F., Giovannelli, A., Lisle, L., Thomas, J., & Bowman, D. A. (2023, October). CoLT: Enhancing Collaborative Literature Review Tasks with Synchronous and Asynchronous Awareness Across the Reality-Virtuality Continuum. In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 831-836). IEEE.

Read Full Paper | See Prototype

Spaces to Think: A Comparison of Small, Large, and Immersive Displays for the Sensemaking Process

Published in 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2023

Analysts need to process large amounts of data in order to extract concepts, themes, and plans of action based upon their findings. Different display technologies offer varying amounts of space and interaction methods that change the way users can process data. In a comparative study, we investigated how using a single traditional monitor, a large, high-resolution two-dimensional monitor, or an immersive three-dimensional space with the Immersive Space to Think approach impacts the sensemaking process. We found that user satisfaction grows and frustration decreases as available space increases. We observed specific strategies users employ in the various conditions to assist with the processing of datasets. We also found an increased usage of spatial memory as space increased, which improves performance in artifact position recall tasks. In future systems supporting sensemaking, we recommend using display technologies that provide users with large amounts of space to organize information and analysis artifacts.

Recommended citation: L. Lisle, K. Davidson, L. Pavanatto, I. A. Tahmid, C. North and D. A. Bowman, "Spaces to Think: A Comparison of Small, Large, and Immersive Displays for the Sensemaking Process," 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Sydney, Australia, 2023, pp. 1084-1093

Read Full Paper

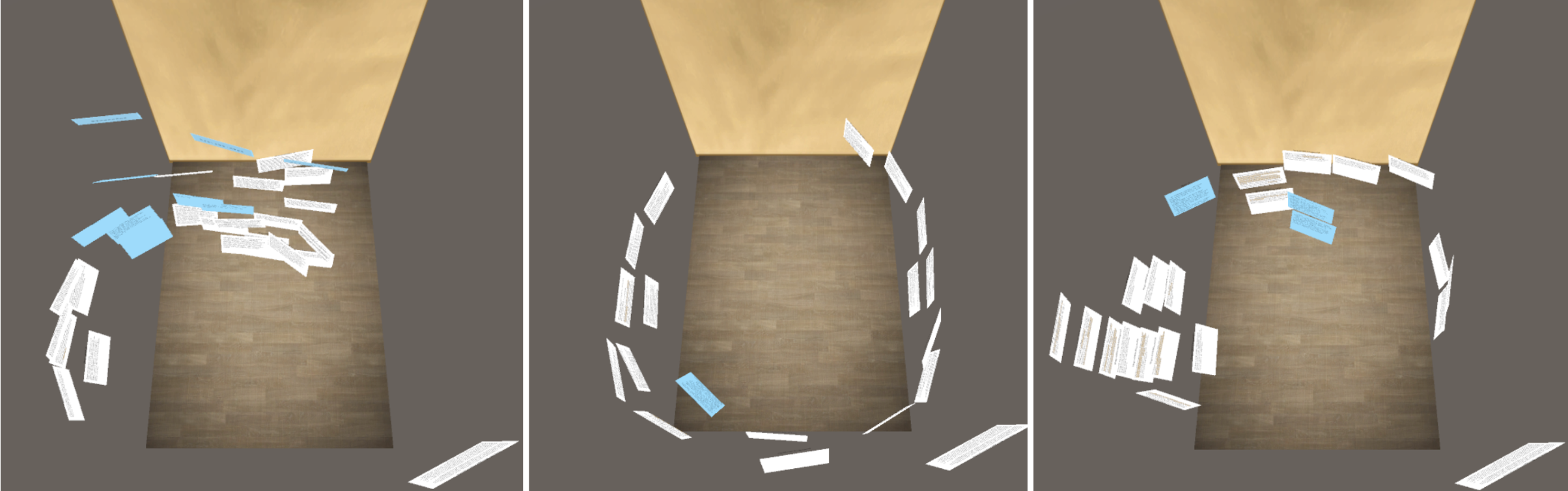

Uncovering Best Practices in Immersive Space to Think

Published in 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2023

As immersive analytics research becomes more popular, user studies have been aimed at evaluating the strategies and layouts of users’ sensemaking during a single focused analysis task. However, approaches to sensemaking strategies and layouts are likely to change as users become more familiar/proficient with the immersive analytics tool. In our work, we build upon an existing immersive analytics approach, Immersive Space to Think, to understand how schemas and strategies for sensemaking change across multiple analysis tasks. We conducted a user study with 14 participants who completed three different sensemaking tasks during three separate sessions. We found significant differences in the use of space and strategies for sensemaking across these sessions and correlations between participants’ strategies and the quality of their sensemaking. Using these findings, we propose guidelines for effective analysis approaches within immersive analytics systems for document-based sensemaking.

Recommended citation: Davidson, K., Lisle, L., Tahmid, I. A., Whitley, K., North, C., & Bowman, D. A. (2023, October). Uncovering Best Practices in Immersive Space to Think. In 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 1094-1103). IEEE.

Read Full Paper

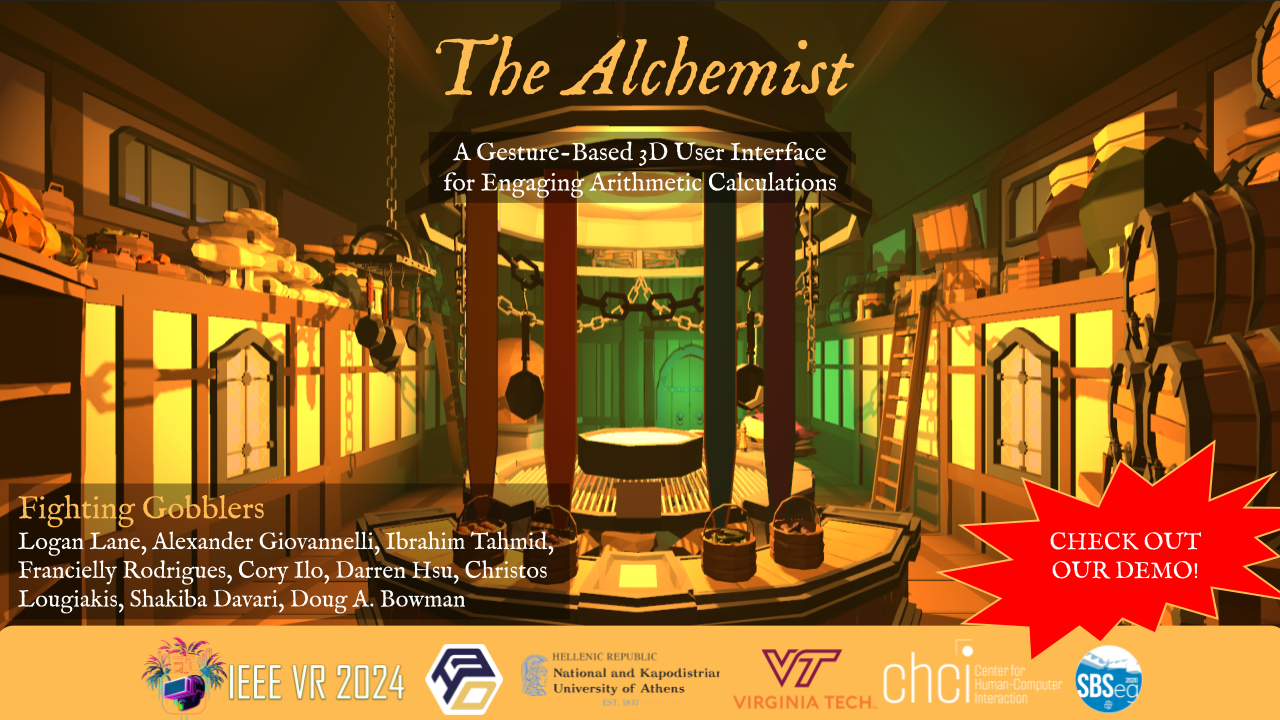

The Alchemist: A Gesture-Based 3D User Interface for Engaging Arithmetic Calculations

Published in 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2024

This paper presents our solution to the IEEE VR 2024 3DUI contest. We present The Alchemist, a VR experience tailored to aid children in practicing and mastering the four fundamental mathematical operators. In The Alchemist, players embark on a fantastical journey where they must prepare three potions to break a malevolent curse imprisoning the Gobbler kingdom. Our contributions include the development of a novel number input interface, Pinwheel, an extension of PizzaText [7], as well as four novel gestures, each corresponding to a distinct mathematical operator, designed to assist children in retaining practice with these operations. Preliminary tests indicate that Pinwheel and the four associated gestures facilitate the quick and efficient execution of mathematical operations.

Recommended citation: Lane, L., Giovannelli, A., Tahmid, I., Rodrigues, F., Ilo, C., Hsu, D., Lougiakis, C., Davari, S. and Bowman, D.A., 2024, March. The Alchemist: A Gesture-Based 3D User Interface for Engaging Arithmetic Calculations. In 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 1106-1107). IEEE.

Read Full Paper

Investigating Professional Analyst Strategies in Immersive Space to Think

Published in IEEE Transactions on Visualization and Computer Graphics, 2024

Existing research on sensemaking in immersive analytics systems primarily focuses on understanding how users complete analysis within these systems with quantitative and qualitative datasets. However, these user studies mainly concentrate on understanding analysis styles and methodologies from a predominantly novice user study population. While this approach provides excellent initial insights into what users may do within IA systems, it fails to address how professionals may utilize an immersive analytic system for analysis tasks. In our work, we build upon an existing immersive analytics concept - “Immersive Space to Think” to understand how professional user populations differ from novice users in immersive analytic system usage. We conducted a user study with 11 professional intelligence analysts who completed three analysis sessions each. Using our results from this study, we provide deep analysis into how professional users complete sensemaking within immersive analytic systems, compare our findings to previously published findings with a novice user population, and provide insights into how to develop better IA systems to support the professional analyst’s strategies within these systems.

Recommended citation: Davidson, K., Lisle, L., Tahmid, I. A., Whitley, K., North, C., & Bowman, D. A. (2024). Investigating Professional Analyst Strategies in Immersive Space to Think. IEEE Transactions on Visualization and Computer Graphics.

Read Full Paper

Enhancing Immersive Sensemaking with Gaze-Driven Smart Recommendations

Published in 30th Annual ACM Conference on Intelligent User Interfaces (IUI), 2025

Sensemaking is a complex task that places a heavy cognitive demand on individuals. With the recent surge in data availability, making sense of vast amounts of information has become a significant challenge for many professionals, such as intelligence analysts. Immersive technologies such as mixed reality offer a potential solution by providing virtually unlimited space to organize data. However, the difficulty of processing, filtering relevant information, and synthesizing insights remains. We proposed using eye-tracking data from mixed reality head-worn displays to derive the analyst’s perceived interest in documents and words, and to convey that part of the mental model back to the analyst. The global interest of the documents is reflected in their color and their order in the list, while the local interest of the documents is used to generate focused recommendations for a document. To evaluate these recommendation cues, we conducted a user study with two conditions: a gaze-aware system, EyeST, and a Freestyle system without gaze-based visual cues. Our findings reveal that EyeST helped analysts stay on track by reading more essential information while avoiding distractions. However, this came at the cost of reduced focused attention and perceived system performance. The results of our study highlight the need for explainable AI in human-AI collaborative sensemaking to build user trust and encourage the integration of AI outputs into the immersive sensemaking process. Based on our findings, we offer a set of guidelines for designing gaze-driven recommendation cues in an immersive environment.

Recommended citation: Tahmid, I. A., North, C., Davidson, K., Whitley, K., & Bowman, D. A. (2025, March). Enhancing Immersive Sensemaking with Gaze-Driven Smart Recommendations. In 2025 ACM Intelligent User Interfaces (IUI). ACM.

Read Full Paper | See Prototype

talks

Test Talk 1

Published:

Conference Proceeding talk 3 on Relevant Topic in Your Field

Published:

This is a description of your conference proceedings talk, note the different field in type. You can put anything in this field.

teaching

Teaching experience 1

Undergraduate course, University 1, Department, 2014

This is a description of a teaching experience. You can use markdown like any other post.

Teaching experience 2

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.